[5]:

import warnings

warnings.filterwarnings('ignore')

Examples#

This notebook contains examples of how to use the SDCC to calculate material properties of particles.

Defining a particle#

A particle in the SDCC is defined by a number of parameters:

TMx is the % titanomagnetite in the compositionPRO is the prolateness of the ellipsoid geometry (major axis/intermediate axis)OBL is the oblateness of the ellipsoid geometry (intermediate axis/minor axis)alignment is the direction of the elongation, either easy for along an easy axis or hard for along a hard axis.T is the temperature at which the surface is being evaluated (optional)ext_field is the external field - [theta,phi,intensity (T)] (optional)Other magnetic minerals and shapes will be available in the future.

Here, we’re going to define a particle as a sphere of magnetite:

[2]:

TMx = 0

alignment = 'hard'

PRO = 1.00

OBL = 1.00

Plotting energy surfaces.#

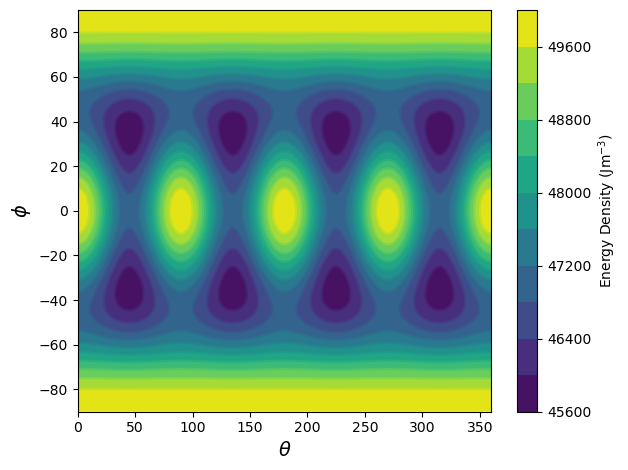

The sdcc.plotting module has several plots for different types of results the SDCC can generate. One of the quickest operations in the SDCC is to plot an energy surface. The plot_energy_surface function can be used to quickly do this for a particle in an equirectangular projection. Note that the coordinates \(\theta\) and \(\phi\) used in this plot correspond to a convention similar to declination and inclination, and not to typical spherical coordinates.

[3]:

from sdcc.plotting import plot_energy_surface

plot_energy_surface(TMx,alignment,PRO,OBL);

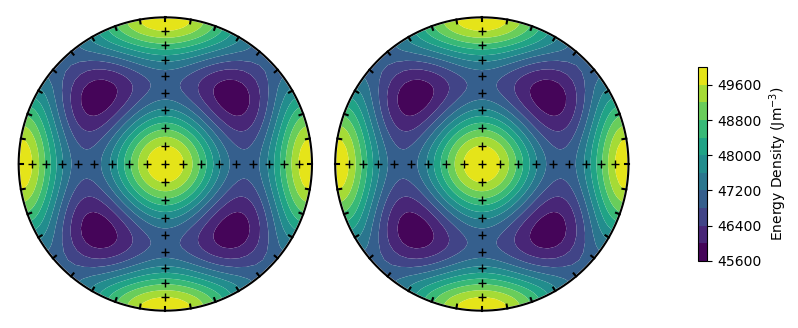

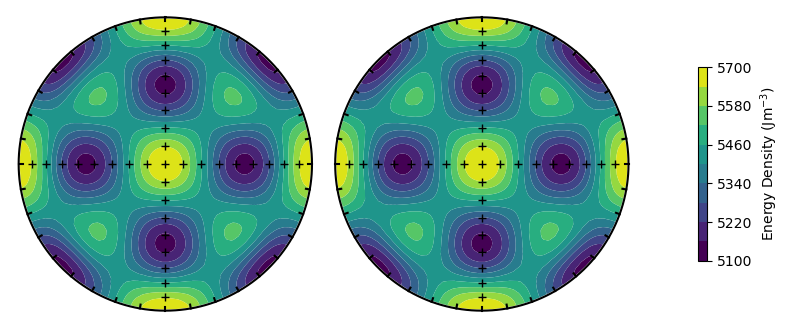

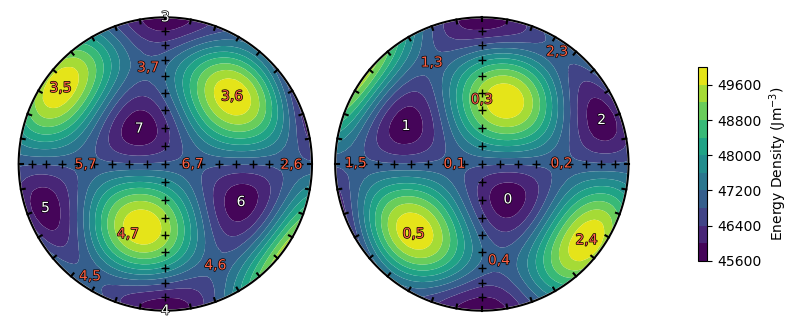

A more useful projection would be to use upper and lower hemisphere stereographic projections, which can be used by passing the argument projection = 'stereo'.

[4]:

plot_energy_surface(TMx,alignment,PRO,OBL,projection = 'stereo');

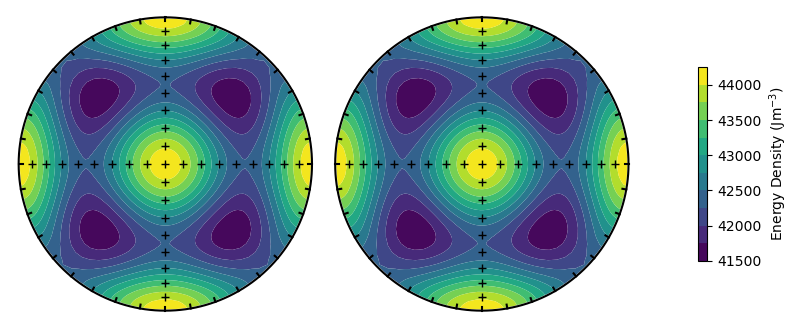

The SDCC can rapidly calculate energy surfaces for particles with different parameters. For example, we can calculate the energy surface for an elongate ellipsoid by increasing the PRO parameter. The SDCC is set up so the major axis is always along \(x\) (or North in the stereographic projection). The minor axis is always along \(z\) (or up) and the intermediate axis is always along \(y\) (or East).

[5]:

PRO = 3.00

plot_energy_surface(TMx,alignment,PRO,OBL,projection = 'stereo');

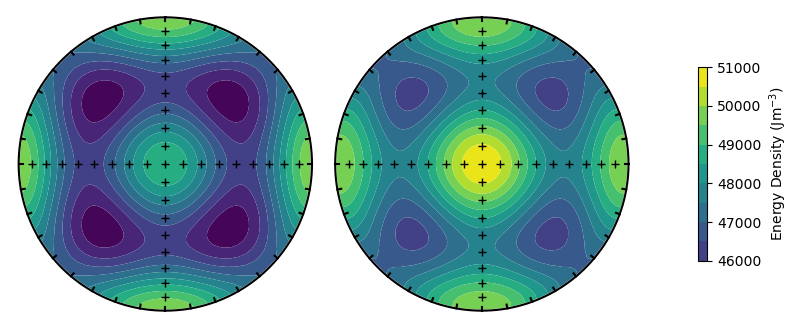

Alternatively, we could change the titanomagnetite composition to see a change in magnetocrystalline anisotropy

[6]:

PRO = 1.00

TMx = 55

plot_energy_surface(TMx,alignment,PRO,OBL,projection = 'stereo');

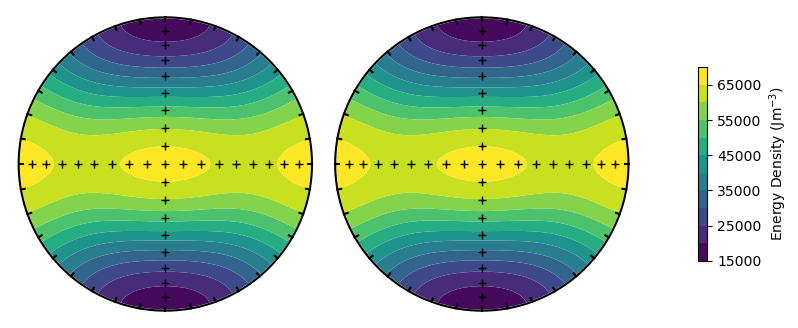

We have not yet changed the temperature or external field. These can be changed using the optional T and ext_field arguments. Changing the temperature does not change the shape of the surface for magnetocrystalline particles, but note the change in the scale on the colorbar compared to our first image.

[7]:

TMx = 0

T = 100

plot_energy_surface(TMx,alignment,PRO,OBL,T = T,projection = 'stereo');

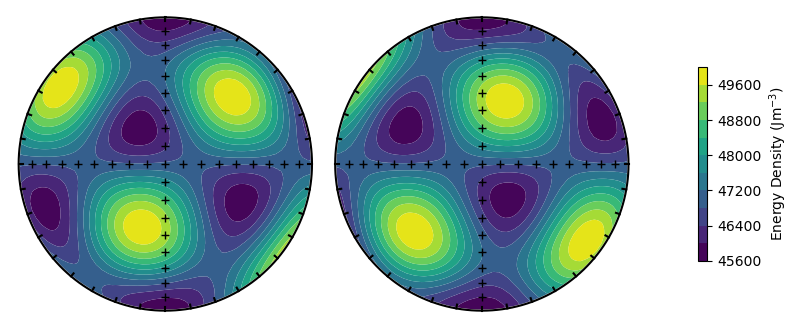

Changing the external field will show a difference between the upper and lower hemisphere projections (as the field differs between the two).

[8]:

ext_field = [0,90,2e-3] #Dec 0, inc 90 (vertically up), 2 mT field

plot_energy_surface(TMx,alignment,PRO,OBL,ext_field = ext_field,projection = 'stereo');

Finally, we can rotate the magnetocrystalline directions using the alignment argument.

[9]:

alignment = 'easy'

plot_energy_surface(TMx,alignment,PRO,OBL,projection = 'stereo');

Calculating energy barriers#

Functions in the barriers module are used to calculate energy barriers. Let’s take the cubic magnetite particle from before.

[10]:

TMx = 0

alignment = 'easy'

PRO = 1.00

OBL = 1.00

We can calculate the LEM states and energy barriers using the find_all_barriers() function. This will output the \(\theta\) and \(\phi\) coordinates of the barriers as well as the energies.

[11]:

from sdcc.barriers import find_all_barriers

lem_t, lem_p, lem_e, bar_t, bar_p, bar_e = find_all_barriers(TMx, alignment, PRO, OBL)

This produces a large number of arrays - a good way to visualize the LEM states and energy barriers is using the plotting.plot_minima() and plotting.plot_barriers() functions.

[12]:

from sdcc.plotting import plot_minima, plot_barriers

plot_energy_surface(TMx, alignment, PRO, OBL, projection = 'stereo')

plot_minima(lem_t, lem_p, projection = 'stereo')

plot_barriers(bar_t, bar_p, projection = 'stereo')

You may notice that there are some spurious energy barriers near energy maxima. These won’t affect our results, as the relaxation times of these spurious barriers are far larger than those of the smaller barriers, which will dominate our results.

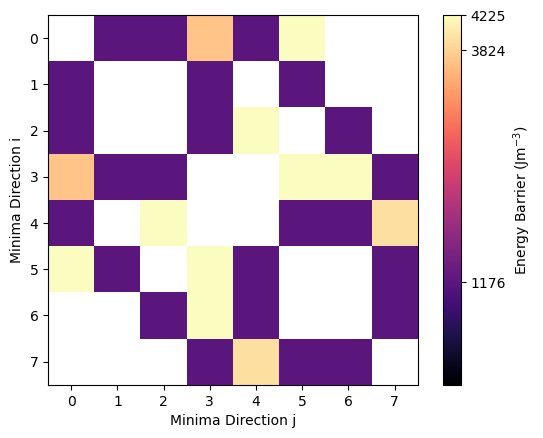

We can also visualize the energy barriers using the energy_matrix_plot function. This function graphically displays the value of the energy barriers between the \(i\)th and \(j\)th state in a matrix.

[13]:

from sdcc.plotting import energy_matrix_plot

energy_matrix_plot(bar_e)

The spurious barriers clearly come up as lighter colors and are 3 - 4 x larger. Given that relaxation time scales with exp(barrier), these will have far longer relaxation times and so will have a minimal effect on our overall relaxation rate.

Saving energy barriers#

We can save the energy barriers between states at all temperatures using the GrainEnergyLandcape or GEL (barriers.GEL) object. Saving one of these objects requires a large number of calculations at each temperature and so may take several minutes. Below is code for saving an elongate magnetite particle to a GEL object, although we comment this out to save time.

[14]:

from sdcc.barriers import GEL

TMx = 0

alignment = 'hard'

PRO = 2.50

OBL = 1.00

#thermParticle = GEL(TMx,alignment,PRO,OBL)

GEL objects can be saved to file using the .to_file() argument. This saves them using the pickle file format. We can load saved GEL files using the pickle module. A large number of precomputed GEL files are available in the particles folder.

[15]:

import pickle

#GEL.to_file('test.gel')

with open('../particles/thermal/TM00_PRO_2.50_OBL_1.00_hard.gel', 'rb') as f:

thermParticle=pickle.load(f)

Similarly a HELs object exists for high-field experiments. These objects need to compute energy barriers in a range of field strengths and field orientations. In some cases, they may take hours to create, but can again be easily saved. HELs objects also require you to input a maximum field (in Tesla).

[16]:

from sdcc.barriers import HELs

#hystParticle = HELs(TMx, alignment, PRO, OBL, B_max = 0.3)

Visualizing energy barriers with temperature#

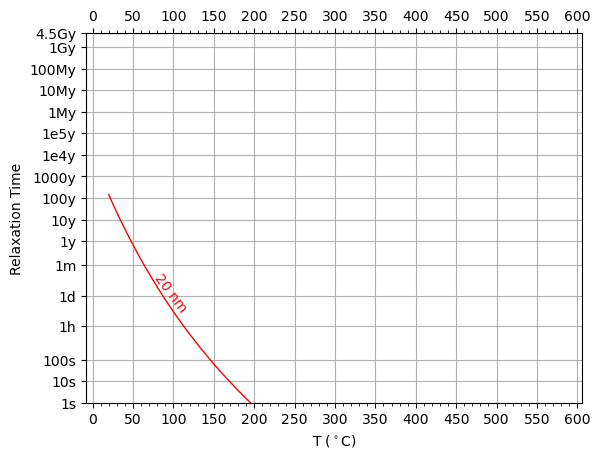

The change in relaxation time with temperature of a GEL object can be visualized with a Pullaiah curve (Pullaiah et. al., 1975). In the SDCC, we can plot these using the plotting.plot_pullaiah_curves() function. To draw a pullaiah curve, we need to specify a size, and we need to give the indices of the energy barrier

[17]:

from sdcc.plotting import plot_pullaiah_curves

sizes = [20] # Pullaiah curve size

i = 0 # state 0

j = 1 # state 1

plot_pullaiah_curves(thermParticle, sizes, 0, 1, color='r')

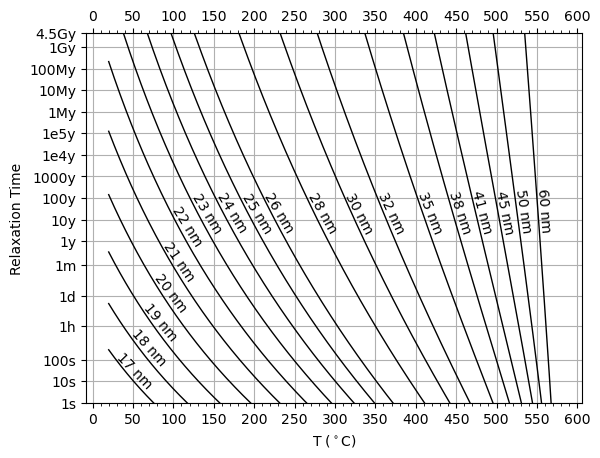

We can plot more curves by specifying more sizes (note this may take a few seconds to run).

[18]:

sizes = [17,18,19,20,21,22,23,24,25,26,28,30,32,35,38,41,45,50,60]

plot_pullaiah_curves(thermParticle, sizes, 0, 1, color='k')

Treatment routines#

Any model being run using the simulations package first requires a set of treatment steps using the sdcc.treatment module. Let’s run a relaxation time experiment using the relaxation_time() function. We need to specify a field direction (in cartesian coordinates) and field strength (in µT).

[20]:

from sdcc.simulation import relaxation_time

B_dir = [1,0,0] #Field direction - along x here

B_str = 40 #Field strength - 40uT

relax_routine = relaxation_time(thermParticle, B_dir,B_str)

Internally, a routine in the SDCC is broken into a list of sdcc.simulation.TreatmentStep objects. We can see these if we print our relaxation time routine.

[21]:

relax_routine

[21]:

[Cooling from 579 to 20°C

in 40 μT field,

VRM acquisition at 20°C

in 0 μT field]

Each of these sub steps has a set of temperature, time and field steps. More complicated routines can be constructed from these, for example a Thellier - Coe experiment. Note that for these experiments, we need to specify both an ancient and lab field, as we need to simulate the history of the pre-history of the particle before the experiment.

[22]:

from sdcc.treatment import coe_experiment

from sdcc.barriers import uniaxial_critical_size

B_anc = 30 #ancient field strength

B_ancdir = [0,0,1] #ancient field direction

temp_steps = [100,200,300,350,400,450,500,520,540,560,579] #Temperature steps for coe experiment

coe_routine = coe_experiment(temp_steps,B_anc,B_str,B_ancdir,B_dir)

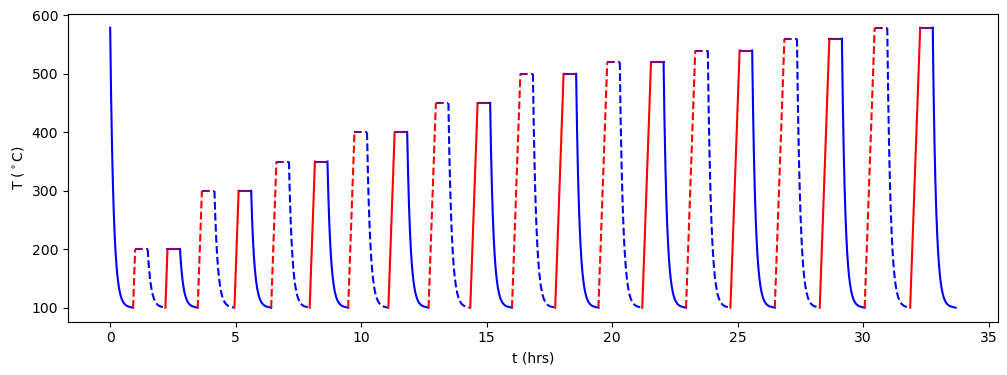

A good way of visualizing an experimental treatment is to make a temperature-time plot of the steps. The sdcc.plotting.plot_routine() function can do this. Blue lines represent cooling steps, red lines heating steps and purple lines have constant temperature. Dashed lines represent zero-field experiments and solid lines represent in-field experiments.

[23]:

from sdcc.plotting import plot_routine

plot_routine(coe_routine)

Running simulations with the SDCC#

Simulations in the sdcc are run using the simulations module. Note that these can be intensive micromagnetic models that may take some time to run.

Each simulation requires an object to store the energy barriers, a treatment routine, and a particle size to run the simulation at.

Viscous relaxation time#

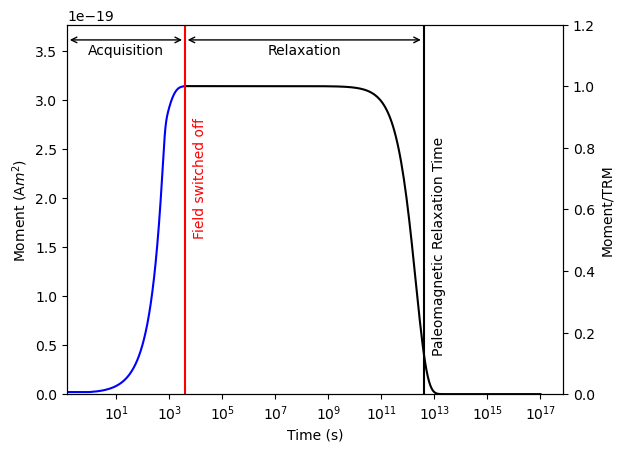

Our first simulation is to simulate a viscous relaxation to obtain a paleomagnetic relaxation time. Our energy barriers and routine were set up earlier - but we’ll set them up again for the purposes of the example. We’re going to run a relaxation time experiment on a 21 nm prolate magnetite particle with a prolateness of 2.50, elongated along the hard axis.

[1]:

import pickle

from sdcc.simulation import relaxation_time

# Read in GEL file for particle

with open('../particles/thermal/TM00_PRO_2.50_OBL_1.00_hard.gel', 'rb') as f:

thermParticle=pickle.load(f)

# Set up treatment routine

B_dir = [1,0,0] #Field direction - along x here

B_str = 40 #Field strength - 40uT

relax_routine = relaxation_time(thermParticle, B_dir,B_str)

# Set up particle size

size = 21 #size in nm

Thermal models in the SDCC are run in a “mono dispersion” of particles in uniformly distributed orientations. For this model, we’ll simulate the viscous relaxation of the same particle in thirty different orientations. This can be done in the sdcc using the sdcc.simulation.mono_dispersion() function. The function outputs a set of magnetic moment vectors that correspond to each time step in each treatment step, as well as a set of probability vectors. Additionally, we need a starting

probability of being in each LEM state as the initial condition of the model. We’re going to start in a demagnetized state. There are two states, so the probability of each is 1/2.

This code (and most of the code cells going forward) may take several minutes to run as these calculations are intensive. If your computer supports parallelized computations with the sdcc - try running the parallelized_mono_dispersion() function instead for better performance.

[26]:

from sdcc.simulation import mono_dispersion

starting_prob = [1/2, 1/2]

moments, probabilities = mono_dispersion(starting_prob,

size,

relax_routine,

thermParticle,

n_dirs = 30)

Working on grain 30 of 30

moments here is a list with two elements. One of these elements corresponds to the initial cooling step, and the other corresponds to the heating step. Each element of the list contains an array with the magnetic moment of the ensemble at each time step.

In the SDCC, we have a specially set up function (sdcc.plotting.plot_relax_experiment) to plot the moment magnitudes at each time step, but this could be done manually.

[27]:

from sdcc.plotting import plot_relax_experiment

plot_relax_experiment(moments, relax_routine)

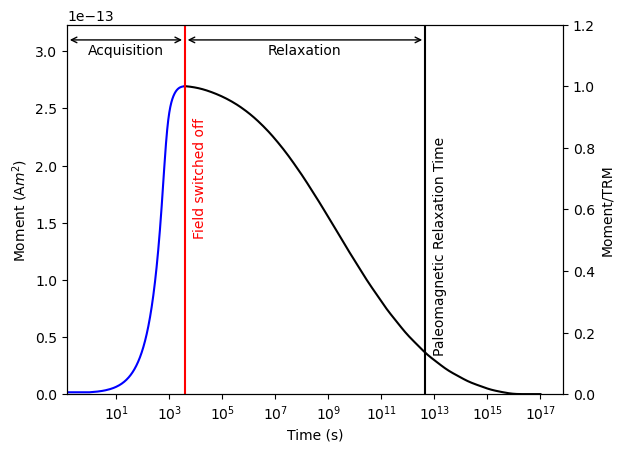

Assemblages of grains.#

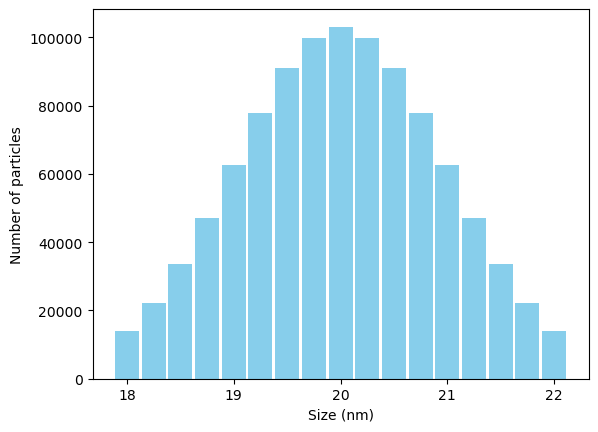

We can work with assemblages of grains by simply taking the viscous relaxation time for each grain and summing up the moments at each time step, multiplied by the number of grains. First, let’s make a gaussian distribution of sizes:

[28]:

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

sizes = np.arange(18,22.25,0.25)

weights = np.exp(-(sizes - 20)**2/2) #Gaussian "weight"

weights = weights/np.sum(weights)*1e6 #Relative number of grains at each size

plt.bar(sizes,weights,color='skyblue',width=0.225);

plt.ylabel('Number of particles')

plt.xlabel('Size (nm)');

We can then loop through our sizes and calculate the moments for each size individually:

[29]:

m_list = []

for size in sizes:

print('\n Current Size:',size)

moments,probs = mono_dispersion(starting_prob,

size,

relax_routine,

thermParticle,

n_dirs=30)

m_list.append(moments)

Current Size: 18.0

Working on grain 30 of 30

Current Size: 18.25

Working on grain 30 of 30

Current Size: 18.5

Working on grain 30 of 30

Current Size: 18.75

Working on grain 30 of 30

Current Size: 19.0

Working on grain 30 of 30

Current Size: 19.25

Working on grain 30 of 30

Current Size: 19.5

Working on grain 30 of 30

Current Size: 19.75

Working on grain 30 of 30

Current Size: 20.0

Working on grain 30 of 30

Current Size: 20.25

Working on grain 30 of 30

Current Size: 20.5

Working on grain 30 of 30

Current Size: 20.75

Working on grain 30 of 30

Current Size: 21.0

Working on grain 30 of 30

Current Size: 21.25

Working on grain 30 of 30

Current Size: 21.5

Working on grain 30 of 30

Current Size: 21.75

Working on grain 30 of 30

Current Size: 22.0

Working on grain 30 of 30

Then we can multiply the moments by the number of particles and sum everything up.

[30]:

weighted_m_list = np.array(m_list).T * weights

m_sum = np.sum(weighted_m_list, axis = 1)

Let’s plot our final result:

[31]:

plot_relax_experiment(m_sum, relax_routine)

Paleointensity Experiments#

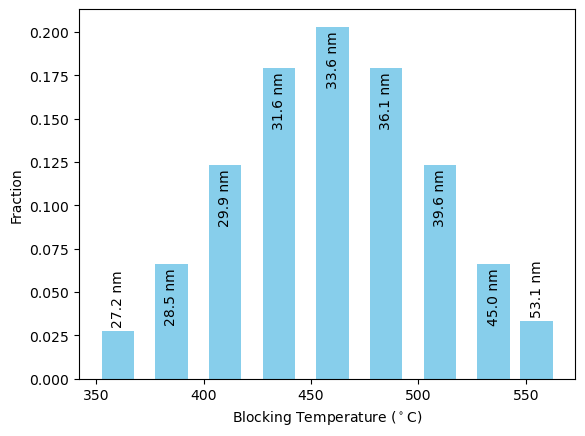

To run a paleointensity experiment, we first need a distribution of particles. For this experiment, it makes sense to first come up with a blocking temperature distribution, and translate that to a size distribution. The sdcc.analysis.get_critical_sizes() function can do this for us.

We can then weight the distribution by its blocking temperatures. Let’s assume the blocking temperature distribution is a normal distribution with a mean of 460\(^\circ\)C and a standard deviation of 50\(^\circ\)C. We’ll weight the average magnetic moments of each of our particles by this amount.

[33]:

from sdcc.analysis import get_critical_sizes

Blocking_Ts = [360,385,410,435,460,485,510,535,555]

sizes = get_critical_sizes(thermParticle, Blocking_Ts)

#Gaussian Distribution

weights = np.exp(-(np.array(Blocking_Ts) - 460)**2 / (2*50**2))

weights /= sum(weights)

#Make bar plot

plt.bar(Blocking_Ts,weights,width=15,color='skyblue');

plt.xlabel('Blocking Temperature ($^\circ$C)')

plt.ylabel('Fraction');

#Plot sizes on bar plot

for i, size in enumerate(sizes):

if weights[i]>0.04:

plt.text(Blocking_Ts[i],weights[i]-0.002,'%2.1f'%size+' nm',ha='center',va='top',rotation=90)

else:

plt.text(Blocking_Ts[i],weights[i]+0.002,'%2.1f'%size+' nm',ha='center',va='bottom',rotation=90)

We can run the model with the distribution as before. Note that due to the much larger number of steps in the Coe experiment, this will likely take a couple of hours to run.

[ ]:

m_list = []

for size in sizes:

print('\n Current Size:',size)

moments,probs = mono_dispersion(starting_prob,

size,

coe_routine,

thermParticle,

n_dirs=30)

m_list.append(moments)

Current Size: 27.245683017354114

Working on grain 30 of 30

Current Size: 28.48332083825177

Working on grain 30 of 30

Current Size: 29.898056082724295

Working on grain 30 of 30

Current Size: 31.55568763857202

Working on grain 30 of 30

Current Size: 33.561743391491746

Working on grain 30 of 30

Current Size: 36.111631548396474

Working on grain 26 of 30

For ease of processing Thellier data to obtain Arai/Zijderveld plot data, we have the function sdcc.analysis.process_thellier_data(). This applies the weights to the different grains and produces Zijderveld and Arai plot data. The Arai plot data can be plotted using the function sdcc.plotting.plot_arai().

[41]:

from sdcc.plotting import plot_arai

from sdcc.analysis import process_thellier_data

Zs,Is,Zs_mag,Is_mag = process_thellier_data(m_list,coe_routine,weights)

plot_arai(Zs_mag/Zs_mag[0], Is_mag/Zs_mag[0], B_anc, B_str, temp_steps)

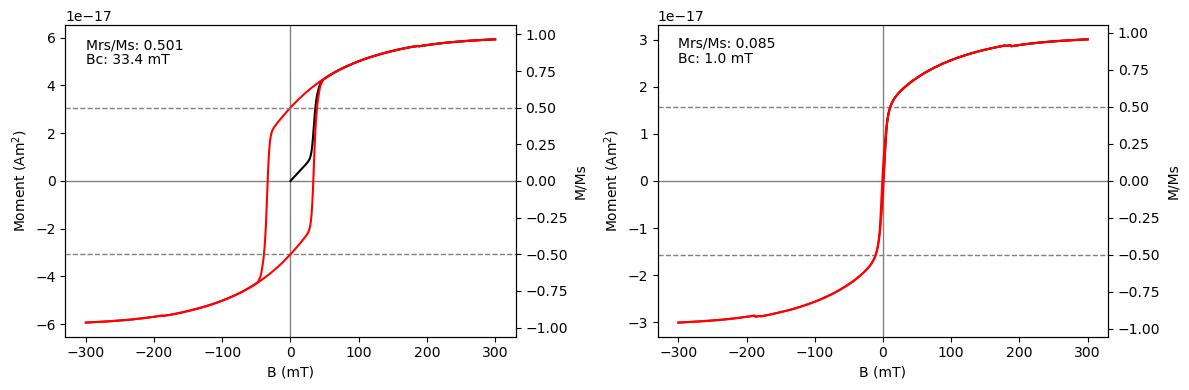

Thermally activated hysteresis#

To do hysteresis calculations, we need to use the sdcc.barriers.HELs object. Let’s import one we made earlier. This particle has the same geometry and composition as in our other simulations.

[2]:

with open('../particles/highfield/TM00_PRO_2.50_OBL_1.00_hard.hels', 'rb') as f:

hystParticle=pickle.load(f)

The routine for hysteresis loops is found at sdcc.treatment.hyst_loop. The routine requires a maximum field (B_max, in T) and a value for the measurement frequency as a function of field (B_step, default is 0.001 T = 1 mT). Let’s do the hysteresis loop and run for two sizes, 16 and 20 nm particle. To run hysteresis and other high-field simulations, we will need to use hyst_mono_dispersion or parallelized_hyst_mono_dispersion as opposed to mono_dispersion.

[6]:

from sdcc.treatment import hyst_loop

from sdcc.simulation import hyst_mono_dispersion

hyst_routine = hyst_loop(B_max = 0.3)

size = 20

size_2 = 16

moments_sd, ps_sd = hyst_mono_dispersion(size, hyst_routine, hystParticle)

moments_sp, ps_sp = hyst_mono_dispersion(size_2, hyst_routine, hystParticle)

Working on grain 50 of 50

Hysteresis data can be analyzed (for Mrs, Ms and Bc) using the sdcc.analysis.analyze_hyst_data() function. This function can also be used to plot the data using the plot = True argument.

[10]:

from sdcc.analysis import analyze_hyst_data

fig,ax = plt.subplots(1,2,figsize=(12,4))

Mrs, Ms, Bc = analyze_hyst_data(moments_sd, hyst_routine, size, hystParticle, plot=True, ax=ax[0])

Mrs2, Ms2, Bc2 = analyze_hyst_data(moments_sp, hyst_routine, size_2, hystParticle, plot=True, ax=ax[1])

plt.tight_layout()

Saving results to file#

Sometimes it’s necessary to save an SDCC result to file. We can use the function sdcc.simulation.result_to_file() function for this. We pass in the particle energy barrier info, size, routine and results, along with a file extension and directory to save all this information in a json-like format. Note that these files can be large (10s of mb) as the results contain large arrays.

[7]:

from sdcc.simulation import result_to_file

result_to_file(hystParticle, size_2, hyst_routine, moments_sp, ps_sp, file_ext = 'hyst', directory = '../results/')

Saved file to ../results/TM00_PRO_2.50_OBL_1.00_16.0nm.hyst

We can load these objects using pickle as we did before with the GEL and HELs objects to see what’s being stored. For the sake of truncating the output, we print only the keys of the moments/probs data as these are large arrays.

[8]:

with open('../results/TM00_PRO_2.50_OBL_1.00_16.0nm.hyst', 'rb') as f:

result=pickle.load(f)

print(result.keys())

print(result['particle'])

print(result['routine'])

print(result['result'].keys())

dict_keys(['particle', 'routine', 'result'])

{'TM': 0, 'alignment': 'hard', 'PRO': 2.498049532966813, 'OBL': 1.0, 'size': 16}

[Hysteresis Branch from 0.0 mT to

300.0 mT in steps of 1.0 mT, Hysteresis Branch from 300.0 mT to

-300.0 mT in steps of 1.0 mT, Hysteresis Branch from -300.0 mT to

300.0 mT in steps of 1.0 mT]

dict_keys(['moments', 'probs'])